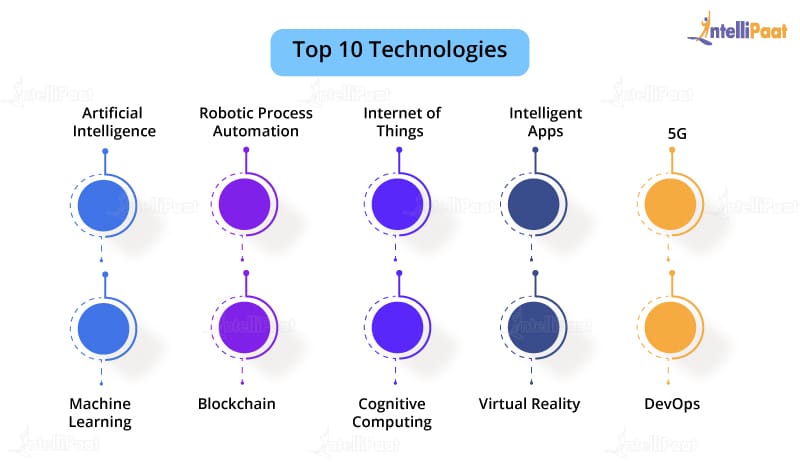

Top technology trends today

Let us discuss these technologies in brief:

1. Artificial Intelligence (AI) and Machine Learning (ML)

Artificial intelligence (AI) refers to the ability of a machine or computer system to perform tasks that would normally require human intelligence, such as learning, problem-solving, and decision-making. AI can be divided into two main categories: narrow and general. Narrow AI is designed to perform a specific task, such as recognizing speech or recommending products, while general AI, which remains largely theoretical, would be able to perform a wide range of intellectual tasks as well as a human can. Common approaches used to build AI systems include supervised and unsupervised learning, reinforcement learning, and deep learning.

AI has the potential to revolutionize many industries, including healthcare, transportation, and manufacturing. It has already been used to develop self-driving cars, improve medical diagnoses, and assist with a wide range of other tasks. However, there are also concerns about the ethical and social implications of AI, including the potential for job loss and the potential for biased or unfair decision-making.

Machine learning (ML) is a branch of artificial intelligence that allows computers to learn from data and make decisions without being explicitly programmed for each case. It uses algorithms and statistical models to analyze and interpret data in order to make predictions or decisions. Machine learning is used in industries such as finance, healthcare, and marketing to improve efficiency and accuracy in decision-making. ML algorithms are commonly divided into three main categories: supervised learning, unsupervised learning, and reinforcement learning. In supervised learning, the computer is given labelled data (inputs paired with the correct outputs) and learns to predict the output for new inputs. In unsupervised learning, the computer is given unlabelled data and must find patterns and relationships in it on its own, for example by grouping similar customers together. In reinforcement learning, the computer acts as an agent that takes actions in an environment and receives rewards or penalties, gradually learning which decisions best achieve its goal.
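To make supervised learning more concrete, here is a minimal Python sketch of a nearest-centroid classifier, one of the simplest supervised methods. It "learns" from labelled examples by averaging the feature vectors of each class, then labels a new input with the class whose average is closest. The function names, the features, and the toy data are illustrative assumptions and do not come from any particular library.

```python
from math import dist

def train_centroids(samples, labels):
    """Learn one centroid (mean feature vector) per label from labelled data."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict(centroids, x):
    """Return the label whose centroid is nearest to x (Euclidean distance)."""
    return min(centroids, key=lambda y: dist(centroids[y], x))

# Hypothetical labelled data: (height_cm, weight_kg) -> size class
samples = [(150, 50), (155, 55), (180, 80), (185, 85)]
labels = ["small", "small", "large", "large"]

model = train_centroids(samples, labels)
print(predict(model, (152, 52)))  # small
print(predict(model, (182, 83)))  # large
```

Real ML libraries provide far more capable models. The structure stays the same, though: a training step fits parameters to labelled data, and a prediction step applies them to inputs the model has not seen.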

2. Robotic Process Automation (RPA)

Robotic process automation (RPA) is a technology that allows organizations to automate repetitive, rules-based digital tasks. It involves the use of software, often referred to as a "robot," to perform tasks that would normally be completed by a human.

RPA can be used to automate a wide range of business processes, including data entry, customer service, and finance and accounting. It is particularly useful for tasks that are highly repetitive, require a high level of accuracy, and involve processing large volumes of data.
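The rules-based data processing that an RPA bot performs can be illustrated in plain Python. Commercial RPA platforms are usually configured through their own visual designers, so this sketch only mirrors the kind of rule logic such a bot applies. The invoice fields, the approval limit, and the queue names are hypothetical.

```python
import csv
import io

# Hypothetical business rule: invoices above this amount need a human reviewer.
APPROVAL_LIMIT = 1000.00

def route_invoice(row):
    """Apply fixed rules to one invoice record and return where it should go."""
    if not row["vendor"].strip():
        return "rejected: missing vendor"
    amount = float(row["amount"])
    if amount <= 0:
        return "rejected: invalid amount"
    if amount > APPROVAL_LIMIT:
        return "manual review"
    return "auto-approved"

def process(csv_text):
    """Read invoice rows from CSV text and route each one, as a bot would."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["id"]: route_invoice(row) for row in reader}

sample = """id,vendor,amount
INV-1,Acme,250.00
INV-2,Globex,4200.00
INV-3,,90.00
"""
for invoice_id, outcome in process(sample).items():
    print(invoice_id, "->", outcome)
```

Because every decision follows an explicit rule, the bot's output is predictable and easy to audit. This predictability is what makes such tasks good candidates for RPA.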

RPA is designed to be easy to use and requires little to no programming knowledge. It is often used in conjunction with other technologies, such as artificial intelligence (AI) and machine learning, to create more advanced automation solutions.

Overall, RPA can help organizations increase efficiency, reduce errors, and free up employees to focus on more value-added activities.

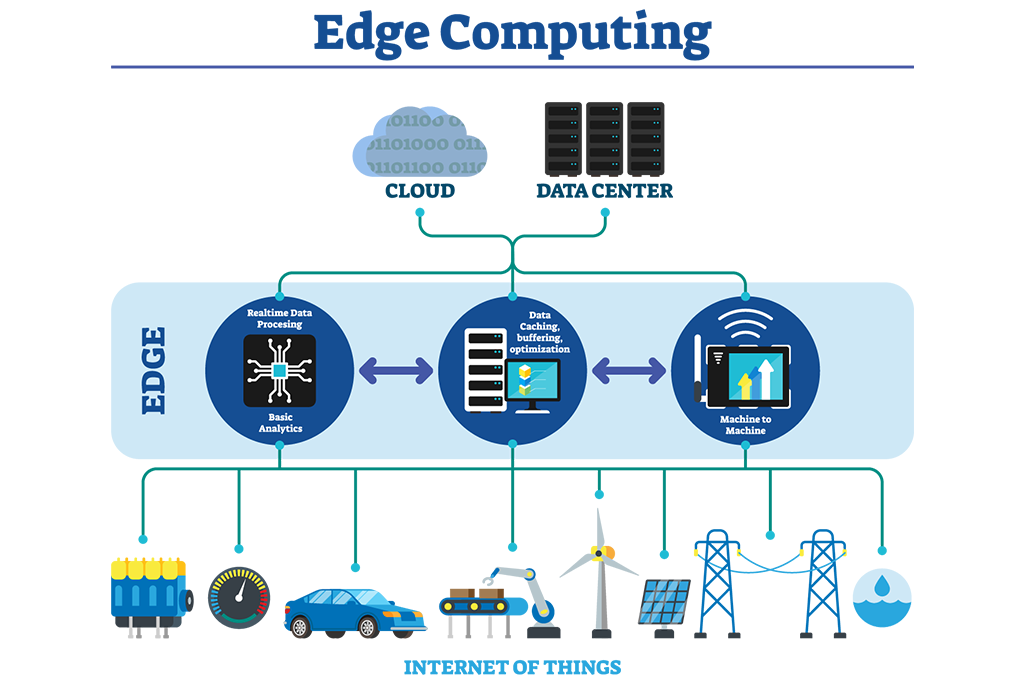

3. Edge Computing

Edge computing is a distributed computing model in which data processing and analysis occur at or near the source of the data, rather than in a central location such as a data centre or the cloud.

In edge computing, data is processed and analyzed by devices or systems located at the edge of a network, such as sensors, Internet of Things (IoT) devices, or other types of smart devices. This can enable faster processing and analysis of data, as it does not need to be transmitted over long distances to a central location for processing.
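The following Python sketch shows the basic idea. An edge device processes raw sensor readings locally and forwards only a compact summary, plus any anomalous values, instead of streaming every reading to a central server. The threshold, the summary fields, and the sample readings are hypothetical assumptions.

```python
from statistics import mean

# Hypothetical alert threshold for a temperature sensor, in degrees Celsius.
ALERT_THRESHOLD_C = 75.0

def summarize_at_edge(readings):
    """Process raw readings locally and return only what the cloud needs."""
    alerts = [r for r in readings if r > ALERT_THRESHOLD_C]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": alerts,  # only anomalous values are forwarded individually
    }

raw = [70.1, 71.4, 69.8, 76.2, 70.5, 70.0]
summary = summarize_at_edge(raw)
print(summary)
```

In this sketch, one small summary travels over the network instead of every individual reading. The alert itself is detected on the device, without waiting for a round trip to the cloud.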

Edge computing can be useful in a variety of applications, including real-time analysis of data from sensors or IoT devices, and in situations where low latency or high reliability are required. It can also be useful in environments where there is limited or unreliable connectivity, as data can be processed locally rather than relying on a connection to the cloud.

Overall, edge computing can help organizations improve the efficiency and effectiveness of their data processing and analysis, and enable the use of new applications and technologies that require fast, reliable access to data.

4. Quantum Computing

Quantum computing is a type of computing that uses quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data. It is based on the principles of quantum mechanics, a branch of physics that deals with the behaviour of very small particles, such as atoms and subatomic particles.

In a traditional computer, data is represented as bits, each of which has a value of either 0 or 1. In a quantum computer, data is represented as quantum bits, or qubits. A qubit can exist in a superposition of 0 and 1 at the same time until it is measured, and qubits can be entangled with one another, meaning that the state of one qubit is correlated with the state of another, even if they are separated by large distances.
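Superposition and entanglement can be illustrated by simulating two qubits on an ordinary computer. The Python sketch below stores the four complex amplitudes of a two-qubit state. It applies a Hadamard gate to create a superposition, then a CNOT gate to entangle the qubits, producing a Bell state. This is only a classical simulation written for illustration, and the function names are assumptions. Simulating n qubits this way needs 2^n amplitudes, which is one reason real quantum hardware is interesting.

```python
from math import sqrt

# Two-qubit state vector over basis |q0 q1>: index 0=|00>, 1=|01>, 2=|10>, 3=|11>.

def apply_h_q0(state):
    """Apply a Hadamard gate to qubit 0, putting it into superposition."""
    s = 1 / sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def apply_cnot_q0_q1(state):
    """Flip qubit 1 when qubit 0 is 1 (swaps the |10> and |11> amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]

state = [1.0, 0.0, 0.0, 0.0]     # start in |00>
state = apply_h_q0(state)        # (|00> + |10>) / sqrt(2): superposition
state = apply_cnot_q0_q1(state)  # (|00> + |11>) / sqrt(2): entangled Bell state
print([round(p, 3) for p in probabilities(state)])  # [0.5, 0.0, 0.0, 0.5]
```

Measuring this state gives 00 or 11 with equal probability, and never 01 or 10. The two qubits' results are perfectly correlated, which is what entanglement means here.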

These quantum-mechanical phenomena allow quantum computers to perform certain types of operations much faster than traditional computers. They have the potential to solve problems that are difficult or impractical for traditional computers, such as factoring the large numbers that underpin widely used public-key encryption, or simulating complex quantum systems.

Quantum computers are still in the early stages of development, and there are many technical challenges to overcome before they can be used for practical applications. However, they have the potential to revolutionize a wide range of fields, including cryptography, drug discovery, and materials science.

5. Virtual Reality (VR) and Augmented Reality (AR)

Virtual reality (VR) and augmented reality (AR) are technologies that enable users to interact with digital content in immersive and interactive ways.

VR refers to the use of computer-generated simulations to create a fully immersive experience in which users feel as if they are physically present in a virtual environment. VR systems typically include a headset with a display screen and sensors that track the user's head movements and allow them to look around the virtual environment as if they were actually there. VR can be used for a variety of applications, including gaming, training, and therapy.

AR refers to the use of technology to overlay digital content onto the physical world in real time. AR systems often use a device with a camera, such as a smartphone or a headset, to display digital content on top of a real-world environment. This can be used to add information, graphics, or other digital content to the user's view of the real world. AR can be used for a variety of applications, including education, entertainment, and advertising.

Overall, VR and AR technologies have the potential to transform how we interact with the digital world and can be used to create new and innovative experiences.

6. Blockchain

A blockchain is a distributed database that maintains a continuously growing list of records grouped into blocks. Each block contains a batch of records, a timestamp, and a cryptographic hash of the previous block, which links the blocks together into a chain.

Blockchains are designed to be secure and transparent, making them well-suited for maintaining records of financial transactions and other sensitive information. They use cryptography to ensure the integrity of the data and to allow multiple parties to access and verify the records without the need for a central authority.
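The Python sketch below shows how hash links protect integrity. Each block stores the SHA-256 hash of the previous block, so changing any earlier block breaks the link that follows it. This is a single-process illustration with hypothetical field names. It leaves out the networking, consensus, and mining that a real blockchain such as Bitcoin relies on.

```python
import hashlib
import json
import time

def block_hash(block):
    """Hash a block's contents deterministically (sorted keys) with SHA-256."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()

def add_block(chain, records):
    """Append a block that stores the hash of the previous block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "timestamp": time.time(),
                  "records": records, "prev_hash": prev})

def is_valid(chain):
    """Check that every block still points at the unmodified previous block."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1])
               for i in range(1, len(chain)))

chain = []
add_block(chain, ["genesis"])
add_block(chain, ["alice pays bob 5"])
add_block(chain, ["bob pays carol 2"])
print(is_valid(chain))  # True

chain[1]["records"] = ["alice pays bob 500"]  # tamper with history
print(is_valid(chain))  # False: block 2's prev_hash no longer matches
```

In a real network, many participants hold copies of the chain. A tampered copy would be rejected because its hashes no longer agree with everyone else's.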

One of the best-known applications of blockchain technology is the creation of digital currencies, such as Bitcoin. However, blockchains have the potential to be used for a wide range of applications, including supply chain management, voting systems, and the management of intellectual property.

Overall, blockchain technology has the potential to revolutionize how we store and manage data and could have significant implications for a wide range of industries and applications.

7. Internet of Things (IoT)

The Internet of Things (IoT) is a network of physical objects, devices, and systems that are connected to the internet and are able to communicate and exchange data with each other. These objects can include everyday items such as appliances, vehicles, and home security systems, as well as industrial equipment and devices. The IoT enables the automation and remote control of these objects, which brings greater efficiency and convenience. For example, a smart thermostat can automatically adjust the temperature in a home based on the user's preferences, and a smart refrigerator can send a notification to a phone when it is time to restock certain items. The IoT has the potential to revolutionize many industries, including healthcare, transportation, and energy.
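The thermostat example can be sketched as simple on-device control logic. In the Python sketch below, a target temperature, which might be set remotely from a phone app, is combined with local sensor readings to decide when to heat. The sketch uses a hysteresis band, a common control technique, so the heater does not switch on and off rapidly around the target. The band width, the target, and the readings are hypothetical. Real smart thermostats may also use schedules or learned behaviour.

```python
def thermostat_step(current_c, target_c, heating_on, band_c=0.5):
    """Decide whether the heater should be on, using a hysteresis band
    so the device does not switch rapidly around the target."""
    if current_c < target_c - band_c:
        return True
    if current_c > target_c + band_c:
        return False
    return heating_on  # inside the band: keep the current state

target = 21.0  # hypothetical user preference, e.g. set from a phone app
heating = False
for reading in [19.8, 20.4, 20.9, 21.6, 21.2, 20.3]:
    heating = thermostat_step(reading, target, heating)
    print(f"{reading:.1f} C -> heating {'on' if heating else 'off'}")
```

In a connected device, `target` would arrive over the network and the readings would come from a local sensor. The decision itself can run entirely on the thermostat.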

8. 5G

5G is the fifth generation of wireless technology for mobile communication, designed to provide faster speeds and higher capacity than previous generations. 5G networks can provide download speeds of 1 Gbps or more (the IMT-2020 requirements set a peak download rate of 20 Gbps), which is much faster than 4G or 3G networks. This increased speed and capacity will support new technologies and applications, such as virtual and augmented reality, self-driving cars, and remote surgery. 5G is also designed to be more efficient and to have lower latency (the delay between when a signal is sent and when it is received) than previous generations, which improves the overall user experience. 5G networks are being rolled out in many countries around the world, although deployment has faced some controversy over concerns about the potential health effects of the higher-frequency radio waves they use. No adverse health effects have been established for exposure within international safety guidelines.
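To show what these speeds mean in practice, here is a small Python calculation of ideal download times. The 100 Mbps figure is only an assumed comparison rate, not a measured 4G speed. The calculation also ignores protocol overhead, signal quality, and network congestion, all of which reduce real-world throughput.

```python
def download_seconds(size_bytes, rate_bits_per_s):
    """Ideal transfer time: bits to send divided by link rate (no overhead)."""
    return size_bytes * 8 / rate_bits_per_s

ONE_GB = 10**9  # decimal gigabyte, in bytes
for label, rate in [("1 Gbps", 10**9), ("100 Mbps (assumed)", 100 * 10**6)]:
    print(f"{label}: {download_seconds(ONE_GB, rate):.0f} s")
```

The factor of 8 converts bytes to bits, because link speeds are quoted in bits per second while file sizes are usually given in bytes.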

9. Cybersecurity

Cybersecurity is the practice of protecting computers, servers, mobile devices, electronic systems, networks, and data from digital attacks, theft, and damage. It involves the use of technologies, processes, and policies to secure networks, devices, and data from unauthorized access or attacks. Cybersecurity is important because it helps to protect sensitive and confidential information, as well as prevent disruptions to critical systems and services. It is a growing concern for individuals, businesses, and governments as the number and sophistication of cyber threats continue to increase. Some common types of cybersecurity measures include firewalls, antivirus software, two-factor authentication, and data encryption.
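Many two-factor authentication apps generate time-based one-time passwords (TOTP), which are specified in RFC 6238. The server and the user's app share a secret key. Each side computes an HMAC over the current 30-second time step and truncates it to a short numeric code, and the login succeeds only if the codes match. The Python sketch below follows that specification, and the function name is an assumption. A production system would normally rely on a vetted library and tolerate small clock differences.

```python
import hashlib
import hmac
import struct
import time

def totp(secret, for_time=None, step=30, digits=6):
    """Time-based one-time password (RFC 6238) using HMAC-SHA1."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)           # number of time steps since epoch
    msg = struct.pack(">Q", counter)           # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                 # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)

# RFC 6238 test secret; at Unix time 59 the 8-digit SHA-1 code is 94287082.
print(totp(b"12345678901234567890", for_time=59, digits=8))
```

Because the code depends on both the secret and the current time, a stolen password alone is not enough to log in. An intercepted code also expires within seconds.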

10. Full Stack Development

Full stack development is a term used to describe the skills and knowledge required to develop and maintain the front-end and back-end of a website or application. A full-stack developer is proficient in a variety of programming languages and technologies, including HTML, CSS, JavaScript, and back-end languages such as Python, Java, and PHP. They also have a strong understanding of databases, servers, and system administration. Full-stack developers can work on all aspects of a website or application, from the design and layout of the front end to the integration of the back-end systems. They are often responsible for the overall development and maintenance of a website or application and can work with different teams and stakeholders throughout the development process.

11. Computing Power

The digital era has made computing power central to almost every mobile device and application, and the infrastructure used to harness that power is expected to keep evolving in the coming years. Computing power gives us advanced technology that can make our lives better, and it is also creating more jobs in the tech industry. Fields such as data science, data analytics, IT management and robotics have the potential to create a large share of new employment in India, where many international brands and companies hire because of the specialised training widely available in the country. The more computing power our devices need, the more specialised professionals will be required, and the economy stands to benefit as a result.

12. Datafication

Datafication is the process of turning everyday activities, interactions, and other information into quantified data that can be easily analyzed and understood. It involves collecting and analyzing large amounts of data in order to extract insights and inform decision-making. Datafication is often used in industries such as marketing, finance, and healthcare to improve efficiency and accuracy in decision-making. It can be achieved with various technologies and tools, such as data analytics software, machine learning algorithms, and data visualization tools. It allows organizations to better understand and interpret data, and to make more informed, data-driven decisions.
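As a small illustration, the Python sketch below turns a log of user actions into per-user numeric count vectors that can be analyzed or fed to a model. The event log, the user names, and the list of tracked actions are hypothetical.

```python
from collections import defaultdict

# Hypothetical raw activity log: (user, action)
events = [("ana", "view"), ("ana", "purchase"), ("ben", "view"),
          ("ana", "view"), ("ben", "view"), ("ben", "view")]

ACTIONS = ["view", "purchase"]  # columns of the resulting numeric table

def datafy(events):
    """Turn a log of qualitative events into per-user numeric count vectors."""
    counts = defaultdict(lambda: [0] * len(ACTIONS))
    for user, action in events:
        counts[user][ACTIONS.index(action)] += 1
    return dict(counts)

table = datafy(events)
print(table)
```

Once the activity is in this form, simple metrics such as each user's purchase rate become straightforward arithmetic on the table.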

13. Digital Trust

Digital trust refers to the level of confidence that individuals and organizations have in the security, privacy, and reliability of online systems and platforms. It is an important concept in the digital age, as more and more sensitive information is being shared and stored online. Digital trust is built through the implementation of strong security measures, transparent privacy policies, and a commitment to maintaining the reliability and integrity of online systems. It is essential for individuals and organizations to protect their digital assets and to ensure that their online activities are secure and private. Loss of digital trust can have serious consequences, including financial loss, reputational damage, and legal liability.

14. Internet of Behaviours

The Internet of Behaviour (IoB) refers to the use of data and analytics to understand and predict human behaviour, particularly in the context of online activities. The IoB involves collecting and analyzing data from sources such as social media, search engines, and online purchasing behaviour in order to gain insights into how individuals and groups behave online. It is used to inform marketing and advertising campaigns, and to improve the design and functionality of online platforms and products. It can also be used to identify and predict trends, preferences, and patterns of behaviour in order to better understand and target specific audiences. Concerns have been raised about the potential for the IoB to be used to manipulate or exploit individuals, and about the risk of data privacy violations.

15. Predictive Analytics

Predictive analytics is the use of data, statistical algorithms, and machine learning techniques to identify the likelihood of future outcomes based on historical data. Predictive analytics is used in various industries, such as finance, healthcare, and marketing, to make informed decisions and predictions about future events. It involves the analysis of large amounts of data in order to identify patterns and relationships and to make predictions about future outcomes. Predictive analytics can be used to forecast trends, optimize marketing campaigns, and improve efficiency and productivity in various fields. It is often used in conjunction with other analytics techniques, such as descriptive analytics (which describes past events) and prescriptive analytics (which suggests actions to take based on data analysis).
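One of the simplest predictive models is a linear trend fitted to historical data by ordinary least squares. The Python sketch below fits such a line to hypothetical monthly sales figures and extrapolates one month ahead. Real predictive analytics work would typically add more features, validate the model on held-out data, and report uncertainty.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x on historical data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    b = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    a = mean_y - b * mean_x
    return a, b

# Hypothetical monthly sales figures for months 1..6
months = [1, 2, 3, 4, 5, 6]
sales = [100, 110, 118, 131, 140, 149]

a, b = fit_line(months, sales)
forecast = a + b * 7  # predict month 7 from the fitted trend
print(f"trend: {b:.2f} per month, month 7 forecast: {forecast:.1f}")
```

The fitted slope `b` summarises the historical pattern, and the forecast assumes that pattern continues. That assumption is the main thing to check before trusting any prediction.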

16. DevOps

DevOps is a software development approach that brings together development and operations teams in order to improve the efficiency and speed of software delivery. It involves tools and practices that automate the process of building, testing, and deploying software, and that allow teams to work more closely together to identify and resolve issues quickly. DevOps aims to increase collaboration between developers and operations teams and to improve communication and coordination between them. It also aims to reduce the time and effort required to develop and maintain software, and to improve the reliability and stability of software products. Some common DevOps practices include continuous integration, continuous delivery, and continuous deployment.

17. 3D Printing

3D printing, also known as additive manufacturing, is a process of creating a three-dimensional object by depositing material layer by layer based on a digital design. 3D printing technology allows for the creation of complex and customized objects using a wide range of materials, including plastics, metals, and ceramics. 3D printing is used in a variety of industries, including manufacturing, healthcare, and architecture, and has the potential to revolutionize the way products are designed and made. 3D printing allows for the rapid prototyping of products and allows for the creation of objects with complex geometries that would be difficult or impossible to create using traditional manufacturing techniques. It also has the potential to reduce waste and improve sustainability in manufacturing processes.

18. AI-as-a-Service

AI as a service (AIaaS) refers to the delivery of artificial intelligence (AI) capabilities as a cloud-based service. AIaaS allows organizations to access and use AI technologies without having to build and maintain their own AI infrastructure. It is typically provided through a subscription model and can be accessed through a web browser or an API. AIaaS is used in industries such as healthcare, finance, and marketing to improve efficiency and accuracy in decision-making. It allows organizations to analyze large amounts of data and make predictions or decisions based on that data without needing specialized in-house expertise or resources. Some common examples of AIaaS include natural language processing, image recognition, and predictive analytics.

19. Genomics

Genomics is the field that uses technology to study the make-up of an organism's DNA and genes, including sequencing and mapping them. Genomic analysis can also quantify genes, which makes it easier for doctors to detect potential health issues early. The field offers both technical and non-technical roles: technical jobs include analysis, design and diagnostics, while non-technical jobs include theoretical analysis and research.
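Some basic sequence operations that genomic analysis builds on can be written in a few lines of Python. The sketch below computes GC content, the reverse complement (the sequence of the opposite DNA strand), and counts of overlapping k-mers. The sample sequence is hypothetical. Real genomics pipelines use specialised tools that handle huge data volumes, sequencing errors, and ambiguous bases.

```python
from collections import Counter

COMPLEMENT = str.maketrans("ACGT", "TGCA")

def gc_content(seq):
    """Fraction of bases that are G or C, a basic sequence statistic."""
    seq = seq.upper()
    return (seq.count("G") + seq.count("C")) / len(seq)

def reverse_complement(seq):
    """Sequence of the opposite DNA strand, read in the 5'->3' direction."""
    return seq.upper().translate(COMPLEMENT)[::-1]

def kmer_counts(seq, k=3):
    """Count overlapping substrings of length k (k-mers)."""
    seq = seq.upper()
    return Counter(seq[i:i + k] for i in range(len(seq) - k + 1))

dna = "ATGCGCGATTAC"  # hypothetical short sequence
print(gc_content(dna))
print(reverse_complement(dna))
print(kmer_counts(dna).most_common(2))
```

Statistics like these are the raw material for the higher-level analysis described above, such as comparing a patient's sequence against a reference genome.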

What should you do next?

The world of technology evolves rapidly and trends change quickly. As an IT professional, you need to stay on top of these trends to build a successful career. What's more, if you are looking for one area of expertise to direct your career toward, understanding how each of these technologies works, both on its own and in combination with the others, will give you an edge over other applicants for the same job.

You now have an overview of the technologies that are expected to make the greatest impact in the years to come. Keeping in mind the promising career options they offer and your personal goals, start training in a technology you like. Get the early-bird advantage and sharpen your skills so that you're among the first to embrace these technologies.

Thanks for reading.
